Latent Handstyles

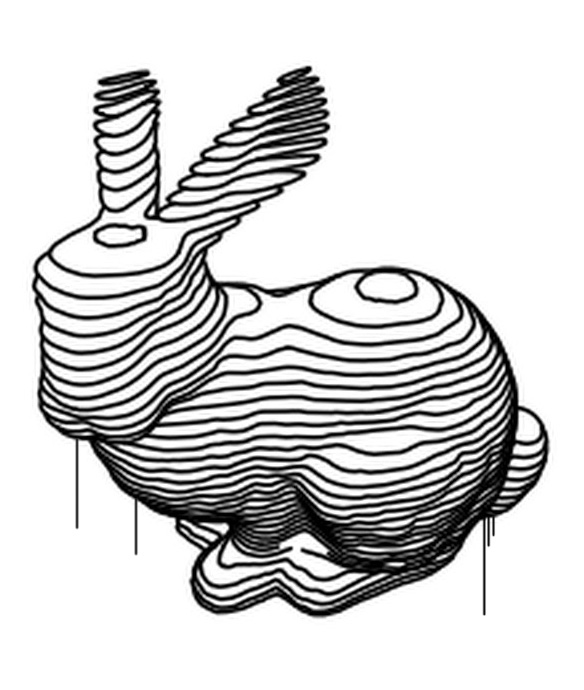

In this work I explore the use of the Contrastive Language-Image Pre-training (CLIP) model to drive the generation of traces that resemble graffiti tags. CLIP encodes images and their corresponding text captions in a shared latent space. Here I am “exploring” this space in conjunction with an adaptation of the Sigma-Lognormal model of handwriting movements, which is known to reproduce the kinematics (position, speed, acceleration, etc.) of human handwriting well. By integrating this model with a differentiable vector graphics (DiffVG) renderer, it is possible to optimize the parameters describing a smooth movement with respect to an image-based objective such as the one provided by CLIP. The results shown here are guided by a text prompt that attempts to steer the output towards writing the word "CREAM".
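To give a rough sense of what a Sigma-Lognormal trajectory looks like, here is a minimal sketch of the textbook form of the model, in which each stroke has a lognormal speed profile and the pen velocity is the vector sum of all strokes. It does not reproduce the adaptation used in this work, and it involves neither CLIP nor DiffVG. The function names, the dictionary keys and the parameter values are all hypothetical and chosen only for illustration.

```python
import math

def lognormal_speed(t, D, t0, mu, sigma):
    """Speed of one stroke at time t: a lognormal bump scaled by D.
    D is the stroke length, t0 its onset time (speed is zero before it)."""
    if t <= t0:
        return 0.0
    dt = t - t0
    norm = sigma * math.sqrt(2.0 * math.pi) * dt
    return D / norm * math.exp(-((math.log(dt) - mu) ** 2) / (2.0 * sigma ** 2))

def stroke_angle(t, t0, mu, sigma, theta_start, theta_end):
    """Direction of motion: moves from theta_start to theta_end in
    proportion to the fraction of the stroke completed (lognormal CDF)."""
    if t <= t0:
        return theta_start
    z = (math.log(t - t0) - mu) / (sigma * math.sqrt(2.0))
    frac = 0.5 * (1.0 + math.erf(z))
    return theta_start + (theta_end - theta_start) * frac

def trajectory(strokes, duration=1.5, steps=1500):
    """Sum the velocity of every stroke and integrate with forward Euler."""
    dt = duration / steps
    x, y = 0.0, 0.0
    points = [(x, y)]
    for i in range(steps):
        t = i * dt
        vx = vy = 0.0
        for s in strokes:
            v = lognormal_speed(t, s["D"], s["t0"], s["mu"], s["sigma"])
            phi = stroke_angle(t, s["t0"], s["mu"], s["sigma"],
                               s["theta_start"], s["theta_end"])
            vx += v * math.cos(phi)
            vy += v * math.sin(phi)
        x += vx * dt
        y += vy * dt
        points.append((x, y))
    return points

# Two overlapping strokes (illustrative values, not taken from the work).
example_strokes = [
    {"D": 1.0, "t0": 0.00, "mu": -1.5, "sigma": 0.3,
     "theta_start": 0.0, "theta_end": 0.8},
    {"D": 0.7, "t0": 0.15, "mu": -1.5, "sigma": 0.3,
     "theta_start": 2.0, "theta_end": 1.2},
]
path = trajectory(example_strokes)
```

Because every step above is a smooth function of the stroke parameters (`D`, `t0`, `mu`, `sigma` and the two angles), the same computation written in an automatic differentiation framework could in principle be optimized by gradient descent against an image-based loss, which is the general idea described in the paragraph above.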

The technique used in this work is described in two papers:

- Berio, Calinon & Plamondon et al. (2025) Differentiable Rasterization of Minimum-Time Sigma-Lognormal Trajectories. [link]

- Berio, Clivaz & Stroh et al. (2025) Image-Driven Robot Drawing with Rapid Lognormal Movements.

The video above (click if it does not autoplay) displays the evolution of different motions generated by the system, rendered with a procedural brush and concatenated into a seamless loop. The same motions can be used to guide a robot:

Daniel Berio
